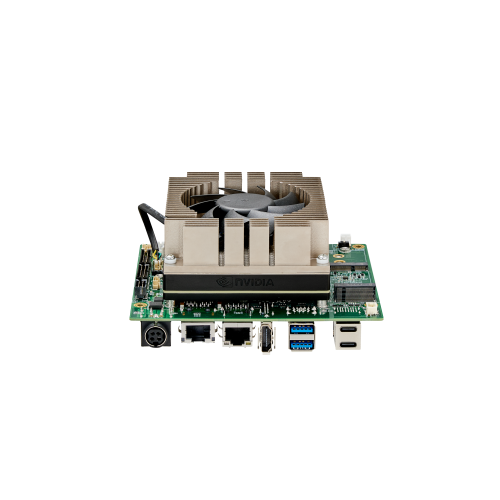

Compact Industrial Jetson AGX Orin Carrier With 10GbE

Key Features

- 10 Gigabit Ethernet

- -25°C to +80°C Operating Temp

- Wide DC Input (9 to 36 VDC)

- 2 x Isolated CAN (Inc. CAN FD)

- 5 x GPIO

- Available Until 2032

Overview

The NVIDIA Jetson AGX Orin module delivers up to 275 TOPS, 8 times the AI performance of the NVIDIA® Jetson AGX Xavier™ 32GB module. With up to 2048 NVIDIA® CUDA® cores and 64 Tensor Cores, it enables low-latency, server-class AI inference at the edge.


Specifications

System

Module Compatibility:

NVIDIA Jetson AGX Orin 32GB (AIB-MX13).

NVIDIA Jetson AGX Orin 64GB (AIB-MX23).

AI Performance:

200 TOPS (AIB-MX13).

275 TOPS (AIB-MX23).

GPU:

1792-core NVIDIA Ampere GPU (56 Tensor Cores) (AIB-MX13).

2048-core NVIDIA Ampere GPU (64 Tensor Cores) (AIB-MX23).

CPU:

8-core Arm® Cortex®-A78AE v8.2 64-bit CPU, 2MB L2 + 4MB L3 (AIB-MX13).

12-core Arm® Cortex®-A78AE v8.2 64-bit CPU, 3MB L2 + 6MB L3 (AIB-MX23).

Memory:

32GB 256-bit LPDDR5, 204.8 GB/s (AIB-MX13).

64GB 256-bit LPDDR5, 204.8 GB/s (AIB-MX23).
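Both memory options quote the same 204.8 GB/s because peak bandwidth depends on bus width and transfer rate, not capacity. As a worked check, assuming the LPDDR5 runs at 6400 MT/s (a rate not stated on this page):

$$
\text{Peak bandwidth} = \frac{256\ \text{bit}}{8\ \text{bit/byte}} \times 6400 \times 10^{6}\ \tfrac{\text{transfers}}{\text{s}} = 32\ \text{B} \times 6.4 \times 10^{9}\ \text{s}^{-1} = 204.8\ \text{GB/s}
$$

This is a theoretical peak; sustained bandwidth in real workloads will be lower.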

Storage:

64GB eMMC 5.1.

Expansion:

1 x M.2 B-Key 3042/3052 (LTE/4G/5G).

1 x M.2 E-Key 2230 (WiFi/BT).

1 x M.2 M-Key 2280 (Supports NVMe) (PCIe x4 Gen4).

1 x microSD Card Slot.

I/O

Display:

1 x HDMI 2.0 (Type A).

Audio:

Line-out/Line-in/Mic (Optional with daughter board).

Camera Input:

1 x 16-Lane MIPI Expansion Connector.

LAN:

1 x RJ-45 GbE port.

1 x RJ-45 10GbE port.

USB:

2 x USB 3.2 Gen 1 (Type-A).

1 x USB OTG (Type-C).

1 x USB 3.2 (Gen2) (Type-C).

Other I/O Interfaces:

2 x I2C.

1 x I2S.

1 x SPI.

5 x GPIO.

1 x 3.3 VDC/0.5A power output.

2 x 5 VDC/0.5A power output.

1 x 12 VDC/0.5A power output.

1 x USB 2.0.

2 x UART.

1 x UART (Debug only).

1 x RS-232.

1 x RS-422/485 (2-in-1).

2 x CAN 2.0B (isolated, CAN FD supported).

Power

Power Consumption:

6.655 W Idle / 52.25* W Full Load (AIB-MX13).

6.9 W Idle / 72.25* W Full Load (AIB-MX23).

*For full test conditions, please refer to the user manual.

Power Input/Connector:

DC-in 9 to 36 VDC / 4-pin DC Jack Power Connector.
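When choosing a supply, the current it must deliver rises as the input voltage falls. The sketch below estimates steady-state input current with I = P / V across the rated 9 to 36 VDC range. It assumes the full-load figures above are total power drawn at the DC jack, and it ignores start-up inrush and extra load from M.2 cards, USB devices, or the auxiliary power outputs; the names `FULL_LOAD_W` and `input_current_a` are illustrative only.

```python
# Rough DC input current estimate for sizing a supply for this carrier.
# Assumption: the quoted full-load figures are total input power at the DC jack
# (see the user manual for the actual test conditions). Inrush and peripheral
# loads are not included.

FULL_LOAD_W = {
    "AIB-MX13": 52.25,
    "AIB-MX23": 72.25,
}

V_MIN, V_MAX = 9.0, 36.0  # rated DC input range (VDC)


def input_current_a(power_w: float, volts: float) -> float:
    """Return steady-state input current in amps, using I = P / V."""
    if not V_MIN <= volts <= V_MAX:
        raise ValueError(f"{volts} V is outside the {V_MIN}-{V_MAX} VDC input range")
    return power_w / volts


if __name__ == "__main__":
    # Print the estimated current for each module at a few common supply voltages.
    for model, watts in FULL_LOAD_W.items():
        for v in (V_MIN, 12.0, 24.0, V_MAX):
            print(f"{model} @ {v:4.1f} V: {input_current_a(watts, v):.2f} A")
```

At the 9 V minimum, the AIB-MX23's 72.25 W full-load figure works out to roughly 8.0 A, so the supply's current rating is usually the tighter constraint at the low end of the input range.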

Environmental

Operating Temperature:

-25°C ~ +80°C (-13°F ~ +176°F).

Storage Temperature:

-40°C ~ +85°C (-40°F ~ +185°F).

Humidity:

95% @ 40°C (104°F) (Non-condensing).

Vibration:

1 Grms, IEC 60068-2-64, random, 5 ~ 500 Hz, 1 hr/axis.

Shock:

10 G, IEC 60068-2-27, half sine, 11 ms duration.

Certification:

CE / FCC Class A / UKCA.

Mechanical

Dimension (W x D x H):

131 x 120 x 62.9 mm (5.16 x 4.72 x 2.47 in).

Weight (Net):

0.701 kg (1.54 lb) With Fansink.

Other Features

Software Support:

Linux (supports JetPack 5.0 and above).

TPM:

TPM v2.0 (Optional).

RTC:

With supercapacitor backup (Optional).

Documentation & Support

- Need Help? Get In Touch

- Talk to a solution specialist; we're here to make sure you get the right solution for your needs.

- +44 (0)1527 512400

- computers@steatite.co.uk


Need a Custom Solution?

Get in touch with our technical specialists to discuss your specific requirements and see if we can offer a solution.